Keeping up with an industry as fast-moving as AI is a tall order. So until an AI can do it for you, here’s a handy roundup of recent stories in the world of machine learning, along with notable research and experiments we didn’t cover on their own.

This week, Meta released the latest in its Llama series of generative AI models: Llama 3 8B and Llama 3 70B. Capable of analyzing and writing text, the models are “open sourced,” Meta said, and are intended to be a “foundational piece” of systems that developers design with their own goals in mind.

“We believe these are the best open source models of their class, period,” Meta wrote in a blog post. “We’re embracing the open source ethos of releasing early and often.”

There’s just one problem: the Llama 3 models aren’t really “open source,” at least not in the strictest definition.

Open source implies that developers can use the models however they choose, unfettered. But in the case of Llama 3, as with Llama 2, Meta has imposed certain licensing restrictions. For example, Llama models can’t be used to train other models. And app developers with over 700 million monthly users must request a special license from Meta.

Debates over the definition of open source aren’t new. But as companies in the AI space play fast and loose with the term, they’re adding fuel to long-running philosophical arguments.

Last August, a study co-authored by researchers at Carnegie Mellon, the AI Now Institute and the Signal Foundation found that many AI models branded as “open source” come with big catches, and not just Llama. The data required to train the models is kept secret. The compute power needed to run them is beyond the reach of many developers. And the labor to fine-tune them is prohibitively expensive.

So if these models aren’t truly open source, what are they, exactly? That’s a good question; defining open source with respect to AI isn’t an easy task.

One pertinent unresolved question is whether copyright, the foundational IP mechanism that open source licensing is based on, can be applied to the various components of an AI project, in particular a model’s inner scaffolding (e.g. embeddings). Then there’s the mismatch between the concept of open source and how AI actually functions to overcome: open source was devised in part to ensure that developers could study and modify code without restrictions. With AI, though, which ingredients you need to do the studying and modifying is open to interpretation.

Wading through all the uncertainty, the Carnegie Mellon study does make clear the harm inherent in tech giants like Meta co-opting the phrase “open source.”

Often, “open source” AI projects like Llama end up kicking off news cycles (free marketing) and providing technical and strategic benefits to the projects’ maintainers. The open source community rarely sees these same benefits, and when it does, they’re marginal compared to the maintainers’.

Instead of democratizing AI, “open source” AI projects, especially those from big tech companies, tend to entrench and expand centralized power, say the study’s co-authors. That’s worth keeping in mind the next time a major “open source” model release comes around.

Here are some other AI stories of note from the past few days:

- Meta updates its chatbot: Coinciding with the Llama 3 debut, Meta upgraded Meta AI, its chatbot across Facebook, Messenger, Instagram and WhatsApp, with a Llama 3-powered backend. It also launched new features, including faster image generation and access to web search results.

- AI-generated porn: Ivan writes about how the Oversight Board, Meta’s semi-independent policy council, is turning its attention to how the company’s social platforms are handling explicit, AI-generated images.

- Snap watermarks: Social media service Snap plans to add watermarks to AI-generated images on its platform. The new watermark, a translucent version of the Snap logo with a sparkle emoji, will be added to any AI-generated image exported from the app or saved to the camera roll.

- The new Atlas: Hyundai-owned robotics company Boston Dynamics has unveiled its next-generation humanoid Atlas robot, which, unlike its hydraulics-powered predecessor, is all-electric and much friendlier in appearance.

- Humanoids on humanoids: Not to be outdone by Boston Dynamics, Mobileye founder Amnon Shashua has launched a new startup, Menteebot, focused on building bipedal robotics systems. A demo video shows Menteebot’s prototype walking over to a table and picking up fruit.

- Reddit, translated: In an interview with Amanda, Reddit CPO Pali Bhat revealed that an AI-powered language translation feature to bring the social network to a more global audience is in the works, along with an assistive moderation tool trained on Reddit moderators’ past decisions and actions.

- AI-generated LinkedIn content: LinkedIn has quietly started testing a new way to boost its revenue: a LinkedIn Premium Company Page subscription, which, for fees that appear to run as high as $99/month, includes AI to write content and a suite of tools to grow follower counts.

- A Bellwether: Google parent Alphabet’s moonshot factory, X, this week unveiled Project Bellwether, its latest bid to apply tech to some of the world’s biggest problems. Here, that means using AI tools to identify natural disasters like wildfires and flooding as quickly as possible.

- Protecting kids with AI: Ofcom, the regulator charged with enforcing the U.K.’s Online Safety Act, plans to launch an exploration into how AI and other automated tools can be used to proactively detect and remove illegal content online, specifically to shield children from harmful content.

- OpenAI lands in Japan: OpenAI is expanding to Japan, with the opening of a new Tokyo office and plans for a GPT-4 model optimized specifically for the Japanese language.

More machine learnings

Image Credits: DrAfter123 / Getty Images

Can a chatbot change your mind? Swiss researchers found that not only can they, but if they’re pre-armed with some personal information about you, they can actually be more persuasive in a debate than a human with that same information.

“This is Cambridge Analytica on steroids,” said project lead Robert West of EPFL. The researchers suspect the model, GPT-4 in this case, drew on its vast stores of arguments and facts from the web to present a more compelling and confident case. But the outcome kind of speaks for itself. Don’t underestimate the power of LLMs in matters of persuasion, West warned: “In the context of the upcoming US elections, people are concerned because that’s where this kind of technology is always first battle tested. One thing we know for sure is that people will be using the power of large language models to try to swing the election.”

Why are these models so good at language anyway? That’s an area with a long history of research, going back to ELIZA. If you’re curious about one of the people who’s been there for a lot of it (and done no small amount of it himself), check out this profile of Stanford’s Christopher Manning. He was just awarded the John von Neumann Medal; congrats!

In a provocatively titled interview, another long-time AI researcher (who has graced the TechCrunch stage as well), Stuart Russell, and postdoc Michael Cohen speculate on “How to keep AI from killing us all.” Probably a good thing to figure out sooner rather than later! It’s not a superficial discussion, though: these are smart people talking about how we can actually understand the motivations (if that’s the right word) of AI models and how regulations ought to be built around them.

The interview is actually about a paper in Science published earlier this month, in which they propose that advanced AIs capable of acting strategically to achieve their goals, which they call “long-term planning agents,” may be impossible to test. Essentially, if a model learns to “understand” the testing it must pass in order to succeed, it may very well learn ways to creatively negate or circumvent that testing. We’ve seen it at a small scale; why not a large one?

Russell proposes restricting the hardware needed to make such agents… but of course, Los Alamos and Sandia National Labs just got their deliveries. LANL just held the ribbon-cutting ceremony for Venado, a new supercomputer intended for AI research, composed of 2,560 Nvidia Grace Hopper chips.

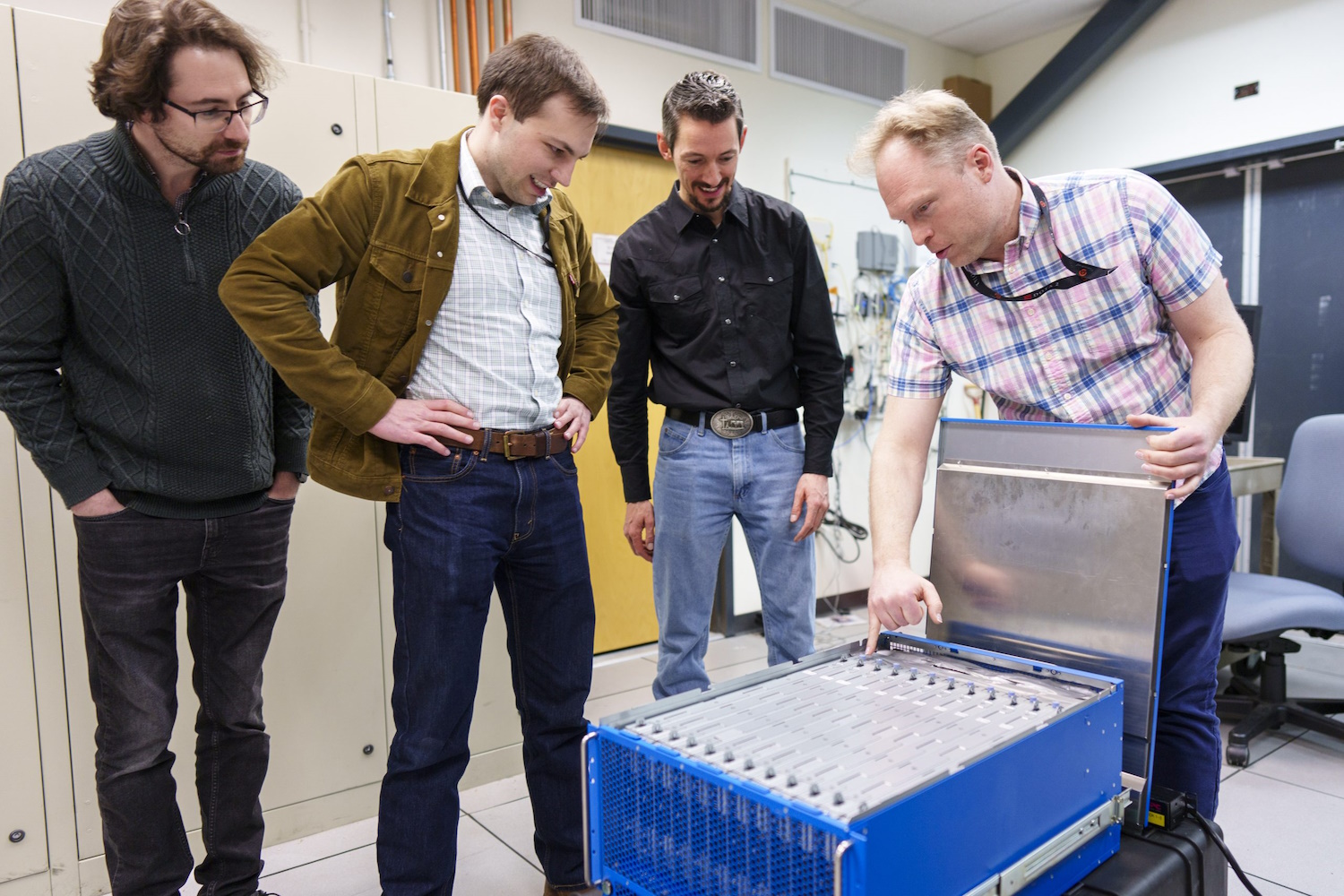

Researchers look into the new neuromorphic computer.

And Sandia just received “an extraordinary brain-based computing system called Hala Point,” with 1.15 billion artificial neurons, built by Intel and believed to be the largest such system in the world. Neuromorphic computing, as it’s called, isn’t intended to replace systems like Venado, but to pursue new methods of computation that are more brain-like than the rather statistics-focused approach we see in modern models.

“With this billion-neuron system, we will have an opportunity to innovate at scale both new AI algorithms that may be more efficient and smarter than existing algorithms, and new brain-like approaches to existing computer algorithms such as optimization and modeling,” said Sandia researcher Brad Aimone. Sounds dandy… just dandy!
