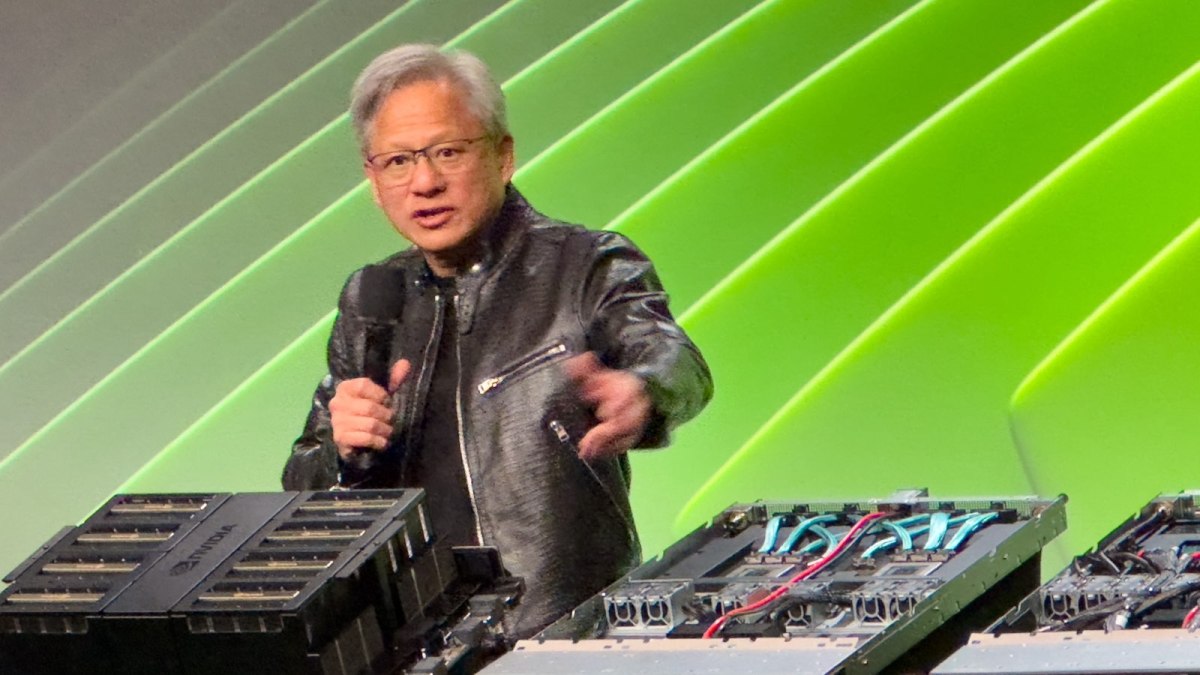

Artificial general intelligence (AGI), often referred to as “strong AI,” “full AI,” “human-level AI” or “general intelligent action,” represents a significant future leap in the field of artificial intelligence. Unlike narrow AI, which is tailored for specific tasks (such as detecting product flaws, summarizing the news, or building you a website), AGI will be able to perform a broad spectrum of cognitive tasks at or above human levels. Addressing the press this week at Nvidia’s annual GTC developer conference, CEO Jensen Huang appeared to be getting really bored of discussing the subject – not least because he finds himself misquoted a lot, he says.

The frequency of the question makes sense: The concept raises existential questions about humanity’s role in and control of a future where machines can outthink, outlearn and outperform humans in virtually every domain. The core of this concern lies in the unpredictability of AGI’s decision-making processes and objectives, which might not align with human values or priorities (a concept explored in depth in science fiction since at least the 1940s). There’s concern that once AGI reaches a certain level of autonomy and capability, it might become impossible to contain or control, leading to scenarios where its actions cannot be predicted or reversed.

When sensationalist press asks for a timeframe, it is often baiting AI professionals into putting a timeline on the end of humanity, or at least the current status quo. Needless to say, AI CEOs aren’t always eager to tackle the subject.

Huang, however, spent some time telling the press what he does think about the topic. Predicting when we will see a passable AGI depends on how you define AGI, Huang argues, and he draws a couple of parallels: Even with the complications of time zones, you know when the new year happens and 2025 rolls around. If you’re driving to the San Jose Convention Center (where this year’s GTC conference is being held), you generally know you’ve arrived when you can see the enormous GTC banners. The crucial point is that we can agree on how to measure that you’ve arrived, whether temporally or geospatially, where you were hoping to go.

“If we specified AGI to be something very specific, a set of tests where a software program can do very well – or maybe 8% better than most people – I believe we will get there within 5 years,” Huang explains. He suggests that the tests could be a legal bar exam, logic tests, economic tests or perhaps the ability to pass a pre-med exam. Unless the questioner is able to be very specific about what AGI means in the context of the question, he’s not willing to make a prediction. Fair enough.

AI hallucination is solvable

In Tuesday’s Q&A session, Huang was asked what to do about AI hallucinations – the tendency for some AIs to make up answers that sound plausible but aren’t based in fact. He appeared visibly frustrated by the question, and suggested that hallucinations are easily solvable – by making sure that answers are well-researched.

“Add a rule: For every single answer, you have to look up the answer,” Huang says, referring to this practice as “retrieval-augmented generation” and describing an approach very similar to basic media literacy: Examine the source and the context. Compare the facts contained in the source to known truths, and if the answer is factually inaccurate – even partially – discard the whole source and move on to the next one. “The AI shouldn’t just answer; it should do research first to determine which of the answers are the best.”

For mission-critical answers, such as health advice or similar, Nvidia’s CEO suggests that perhaps checking multiple resources and known sources of truth is the way forward. Of course, this means that the generator that is creating an answer needs to have the option to say, ‘I don’t know the answer to your question,’ or ‘I can’t get to a consensus on what the right answer to this question is,’ or even something like ‘Hey, the Super Bowl hasn’t happened yet, so I don’t know who won.’
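
To make the idea concrete, here is a minimal Python sketch of the "look it up first, and be willing to abstain" rule described above. It is a toy illustration, not Nvidia's method or a real retrieval-augmented generation system: the `Source` class, the `is_trustworthy` and `answer` functions, the `min_agreement` threshold and the sample data are all hypothetical, and retrieved documents are reduced to simple question-to-answer claims.

```python
from collections import Counter
from dataclasses import dataclass, field

DONT_KNOW = "I don't know the answer to your question."
NO_CONSENSUS = "I can't get to a consensus on what the right answer is."


@dataclass
class Source:
    """A hypothetical retrieved document, reduced to question -> answer claims."""
    name: str
    claims: dict = field(default_factory=dict)


def is_trustworthy(source, known_facts):
    """Reject the whole source if any of its claims contradicts a known fact."""
    return all(
        known_facts.get(question, claim) == claim
        for question, claim in source.claims.items()
    )


def answer(question, sources, known_facts, min_agreement=2):
    """Look the answer up first; abstain when evidence is missing or split."""
    votes = Counter(
        s.claims[question]
        for s in sources
        if question in s.claims and is_trustworthy(s, known_facts)
    )
    if not votes:
        return DONT_KNOW
    ranked = votes.most_common(2)
    best, count = ranked[0]
    tied = len(ranked) > 1 and ranked[1][1] == count
    if count < min_agreement or tied:
        return NO_CONSENSUS
    return best


# Made-up sample data: source "c" contradicts a known fact, so it is discarded.
known_facts = {"boiling point of water at sea level": "100 C"}
sources = [
    Source("a", {"capital of Australia": "Canberra"}),
    Source("b", {"capital of Australia": "Canberra",
                 "boiling point of water at sea level": "100 C"}),
    Source("c", {"capital of Australia": "Sydney",
                 "boiling point of water at sea level": "90 C"}),
]

print(answer("capital of Australia", sources, known_facts))
print(answer("who won next year's Super Bowl", sources, known_facts))
```

The first call is answered from the two sources that survive the fact check, while the second falls back to the "I don't know" reply because no source makes a claim about it. A real system would need actual retrieval and far fuzzier comparison than exact string matching.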