Have you ever wondered how AI models can help communicate complex ideas more clearly and accessibly? At Chequeado's AI Laboratory, we evaluated how well GPT-4, Claude Opus, Llama 3, and Gemini 1.5 simplified excerpts from articles on economics, statistics, and elections. We compared their results with versions written by humans. To do this, we carried out a manual technical evaluation and a survey of potential readers to understand their preferences.

One of the main lessons from this work, which was supported by the ENGAGE fund granted by the IFCN, was the importance of format when conveying complex concepts. The models that structured the information in a more accessible way for the reader got better results in the survey of potential readers. Users liked Claude Opus's answers the most, followed by Llama 3, Gemini 1.5, and the responses written by a human. The three most chosen models used bullet points or a question-and-answer format when rewriting the texts.

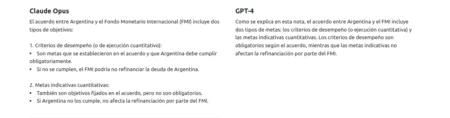

Although GPT-4 scored better than the other models in the technical evaluation, it came last in the user rankings. This result may be related to the fact that it kept the original paragraph format when rewriting the texts. In the technical evaluation, GPT-4 stood out for its ability to respect the style and format of the original text without adding extra information or generating false content. Claude Opus occasionally added summaries at the end of the texts that had not been requested. Llama 3 and Gemini 1.5, on the other hand, had difficulty maintaining the original style and sources, and on several occasions introduced new information that was not present in the original text.

Manual evaluation results

Our first task was to analyze the technical performance of each model according to several metrics:

- Task compliance: Does the model simplify the text without losing relevant information?

- Does not add new information: Does it avoid including data or opinions that are not present in the original?

- Respects style: Does it maintain the tone and style of the original text?

- Respects format: Does it preserve the structure of the original's paragraphs and sections?

- Maintains sources: Does it preserve citations and references to external sources?

We evaluated the average performance of each model using a traffic light system (green/yellow/red). The evaluation showed that all models complied with the task.
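The article does not describe how individual ratings were averaged into one light per model. Below is a minimal sketch of one way to do it. The numeric mapping (green = 2, yellow = 1, red = 0), the thresholds, and the metric keys are all hypothetical choices made for illustration, not Chequeado's actual scale.

```python
from statistics import mean

# Hypothetical numeric mapping for the traffic-light scale (an assumption).
LIGHT_SCORE = {"green": 2, "yellow": 1, "red": 0}


def average_light(ratings: list[str]) -> str:
    """Average per-response ratings for one metric and map back to a light.

    Raises statistics.StatisticsError if `ratings` is empty.
    """
    avg = mean(LIGHT_SCORE[r] for r in ratings)
    # Hypothetical thresholds: >= 1.5 is green, >= 0.5 is yellow, else red.
    if avg >= 1.5:
        return "green"
    if avg >= 0.5:
        return "yellow"
    return "red"


def summarize(ratings_by_metric: dict[str, list[str]]) -> dict[str, str]:
    """Return one traffic light per metric for a single model."""
    return {metric: average_light(r) for metric, r in ratings_by_metric.items()}
```

For example, a model rated green, green, yellow on "respects format" would average about 1.67 and be summarized as green under these thresholds.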

GPT-4 obtained the best results in this evaluation because it respected format and style and did not add new information, although it sometimes lost citations or reference sources present in the original text. Claude did not add false information, but it included unsolicited final summaries. It was also the model that best preserved the original citations and sources, although it altered the format several times to add lists and subtitles and to split the text into sections. Llama refused to answer questions about elections in some tests. All models except GPT-4 generated new formats with titles, questions, shorter sections, and lists to aid understanding, even when the task included the phrase "Respecting the original format."

User preferences

After completing the manual evaluation of technical performance, we ran a survey in which 15 users took part in 5 rounds. In each round they had to choose between two versions of a simplified text (or declare a tie). Each text was generated either by one of the models or by a journalist.

The results revealed that respondents preferred modified formats with bulleted lists and question-and-answer sections. This suggests that format is as important as content in making complex concepts accessible. It may also explain why GPT-4, the best model according to the manual evaluation criteria we defined, was the one users chose least.

If we group the results by response format, there are two clusters. The first is the trio of Claude, Gemini, and Llama, which all use similar formats, with Claude well ahead of the other two. The second is the human version and GPT-4's version, both of which respect the original format of the text. The human version was chosen 54% of the time, compared with 32% for GPT-4, which came last.
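The article reports percentages such as "chosen 54% of the time" without spelling out the formula. Below is a minimal sketch of one plausible way to compute such a selection rate from pairwise votes. The counting rule is an assumption: a tie counts as an appearance for both versions but as a choice for neither. The vote format and the version labels are also illustrative.

```python
from collections import Counter
from typing import Iterable, Optional

# One survey answer: the two versions shown and the one picked (None = tie).
Vote = tuple[str, str, Optional[str]]


def selection_rates(votes: Iterable[Vote]) -> dict[str, float]:
    """Share of appearances in which each version was the one chosen.

    Assumption: ties count as an appearance for both versions but as a
    choice for neither, so ties lower both versions' rates.
    """
    shown: Counter = Counter()
    chosen: Counter = Counter()
    for version_a, version_b, winner in votes:
        shown[version_a] += 1
        shown[version_b] += 1
        if winner is not None:
            chosen[winner] += 1
    return {version: chosen[version] / shown[version] for version in shown}
```

Counting ties differently, for example as half a choice each, would shift every percentage. Any published figures should therefore state which rule was used.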

Time savings

On average, a person takes about 3 minutes to simplify a 50-word paragraph, so rewriting a 500-word article would take around 30 minutes of human work. The models quickly produce a clearer, better-formatted version. However, we also have to account for the time a person needs to review and validate the AI-generated text before publication. That time can vary depending on the response obtained and on how complex the text is to supervise.
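The estimate above is simple arithmetic: 500 words / 50 words per paragraph × 3 minutes = 30 minutes. Below is a minimal sketch that also subtracts a human review time. The review time is a hypothetical input because the article gives no figure for it, and model generation time is assumed to be negligible.

```python
def manual_minutes(word_count: int,
                   words_per_paragraph: int = 50,
                   minutes_per_paragraph: float = 3.0) -> float:
    """Estimated minutes for a person to simplify a text by hand."""
    return word_count / words_per_paragraph * minutes_per_paragraph


def minutes_saved(word_count: int, review_minutes: float) -> float:
    """Manual time minus a hypothetical human review time for the AI draft.

    Assumes model generation time is negligible.
    """
    return manual_minutes(word_count) - review_minutes


print(manual_minutes(500))  # 500 / 50 * 3 = 30.0 minutes
```

With an assumed 10-minute review, `minutes_saved(500, 10)` gives 20.0 minutes. The real saving depends entirely on how long the review actually takes.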

Some key lessons

- Format is key: we found that models that changed the original format (adding titles, lists, etc.) produced clearer and more engaging texts for readers. Although this makes the results harder to compare, it is a crucial lesson for our own writing: if we want to communicate complex concepts better, format is as important as content.

- Curating the prompts (the instructions given to the models) before the evaluation saves significant time, and it is worth spending time refining the instructions as much as possible before testing. It is important to keep the number of prompts small, because the number of tests grows considerably with each prompt added: in our setup, each extra prompt meant 24 more responses to review (6 excerpts × 4 models).

- The perspective of potential readers or users adds a great deal of information and clarity to the process. It helps us understand what works, and why, when these systems are applied in a real-world setting.

Methodology

To carry out this experiment, we followed these steps:

- We selected 6 excerpts from articles containing complex concepts to use as test input.

- We developed 3 prompts to guide the models. This involved comparing different prompting strategies to achieve the best possible results. If you are interested in learning more about prompting, we recommend this guide. The prompt that produced the best results was:

"Context: Imagine you are a data journalist specialized in UX writing and fact-checking.

Task: Respecting the original format, rewrite the following text so that it is more readable, accessible, and clear, without losing any of the original information. The text should be understandable by a high school student.

Text:" [input text to simplify]

- By crossing each of the 3 prompts with each of the 6 excerpts and the 4 models selected for this evaluation, we generated 72 simplified texts for comparison (3 × 6 × 4 = 72).

- We manually evaluated task compliance, consistency, style, and format for each generated response and built a performance scale for each model in each category.

- To add people's subjective preferences, and their view of which simplified versions were clearer, we ran a survey to find out which models best met the task in the eyes of potential readers.
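The crossing of prompts, excerpts, and models in the methodology can be sketched with `itertools.product`. This is a minimal sketch, not Chequeado's actual pipeline. Only the first template reproduces the article's best prompt. The other two templates and the excerpt strings are hypothetical placeholders, and the calls to each model's API are left out.

```python
from itertools import product

# The best-performing prompt from the article, with a {text} placeholder.
BEST_PROMPT = (
    "Context: Imagine you are a data journalist specialized in UX writing "
    "and fact-checking.\n\n"
    "Task: Respecting the original format, rewrite the following text so that "
    "it is more readable, accessible, and clear, without losing any of the "
    "original information. The text should be understandable by a high "
    "school student.\n\n"
    "Text: {text}"
)

MODELS = ["GPT-4", "Claude Opus", "Llama 3", "Gemini 1.5"]


def build_runs(excerpts: list[str],
               prompt_templates: list[str],
               models: list[str] = MODELS) -> list[dict[str, str]]:
    """Cross every excerpt with every prompt template and every model.

    Each run records the target model and the fully rendered prompt; sending
    it to the model is out of scope for this sketch.
    """
    return [
        {"model": model, "prompt": template.format(text=excerpt)}
        for excerpt, template, model in product(excerpts, prompt_templates, models)
    ]


# Hypothetical placeholders: 6 excerpts and two extra prompt variants.
excerpts = [f"Excerpt {i}" for i in range(1, 7)]
templates = [BEST_PROMPT, "Simplify this text: {text}", "Explain simply: {text}"]
runs = build_runs(excerpts, templates)
print(len(runs))  # 6 excerpts x 3 prompts x 4 models = 72
```

Adding a fourth template to `templates` would add 24 runs (6 excerpts × 4 models). This is why keeping the prompt set small matters.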

Conclusion

We designed this small experiment to learn how AI models could help us simplify complex concepts, and also to understand, through practice, how we can build systems to evaluate these models on new tasks.

In the manual evaluation, GPT-4 stood out for completing the tasks, respecting the original format and style, and not generating extra information or hallucinating. The other models had problems and tended to add elements or change the style of the content. However, user preferences revealed how much format and visual presentation shape perceived clarity. Readers consistently chose texts with bullet points, question-and-answer sections, and other visual elements more often, even when those texts came from models that did not strictly follow the original task.

This taught us that when simplifying complex concepts, we must pay as much attention to format as to content. In short, although human review remains essential, the models' ability to generate simplified, well-formatted versions can significantly reduce the time spent on this task. The challenge now is to keep exploring and refining these tools on new tasks, so that we can continue bringing technology into fact-checking to improve our work.

Disclaimer: This text was structured and corrected with the assistance of Claude Opus.